Quenching the AI Thirst Trap

AI has been the topic at the forefront of every conference, annual meeting, tech dinner, etc.

And most folks love to talk about the fear of an AI overlord or sentient killer robots. They worry about scenarios like:

- Terminator

- The Matrix

- Ex Machina

- Wall-E

- That creepy cyber dog in Black Mirror

But if you ask me, we’re more likely to land in the world of Mad Max before that of any killer robot.

After all, building the kind of sentience we fear just might drain our natural resources long before we can get to robots that consider using us as their homegrown battery source. And there’s no point in fearing a future we may not even make it to.

Because AI is hungry… and thirsty.

Look at some of these reports from the last two months alone…

- The Washington Post reports "AI is exhausting the power grid. Tech firms are seeking a miracle solution."

- Wired reports that the Internet has reached an age of “hyper consumption” and AI is to blame.

- Goldman Sachs projects that AI will drive a 160% increase in data center power demand.

- BlackRock’s Systematic Chart of the Month illustrates the impact of AI on electric power demand.

- Axios’s coverage of a recent Electric Power Research Institute report paints a grim picture of the localized cost of data centers.

- A closer look at the carbon footprint of ChatGPT from Piktograph.

- The Brussels Times says ChatGPT consumes 25x as much energy as Google.

- Forbes thinks AI is pushing us towards a global energy crisis.

Here’s a basic breakdown:

Supercomputers made up of specialized GPUs consume massive amounts of electricity in hyperscale data centers. The electricity can come from various sources including green solutions, fossil fuels, geothermal energy, and more.

These supercomputers run 24/7, continuously generating heat that has to be dissipated or things literally start to melt.

The most common cooling methods rely on water, either evaporating it in cooling towers or circulating it through a closed-loop system that carries the heat away.

Why water? Because water has a high specific heat capacity (4.186 J/g°C).

This just means that it can absorb a large amount of heat before it gets too warm, and it can be circulated continuously through the data centers to provide consistent cooling to machines that are never turned off.
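To put a rough number on that, here is a minimal sketch of the standard heat equation Q = m·c·ΔT using the specific heat quoted above. The 1 kWh heat load and the 10 °C temperature rise are my own illustrative assumptions, not figures from any real data center.

```python
# Specific heat of liquid water, as quoted above (joules per gram per °C).
WATER_SPECIFIC_HEAT_J_PER_G_C = 4.186
JOULES_PER_KWH = 3_600_000


def water_mass_kg(heat_kwh: float, temp_rise_c: float) -> float:
    """Mass of water (kg) needed to absorb heat_kwh while warming by temp_rise_c."""
    if temp_rise_c <= 0:
        raise ValueError("temp_rise_c must be positive")
    heat_joules = heat_kwh * JOULES_PER_KWH
    grams = heat_joules / (WATER_SPECIFIC_HEAT_J_PER_G_C * temp_rise_c)
    return grams / 1000


if __name__ == "__main__":
    # Assumed example: 1 kWh of waste heat, water allowed to warm by 10 °C.
    print(f"{water_mass_kg(1.0, 10.0):.0f} kg of water")
```

Under those assumptions, soaking up a single kilowatt-hour of heat takes roughly 86 kg of water, which is why the stuff has to keep flowing.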

Remember when we used to build custom gaming PCs? To use the latest and greatest graphics cards (Nvidia even in 2010), we had to pair them with a liquid cooling system.

This is basically that…on a massive scale.

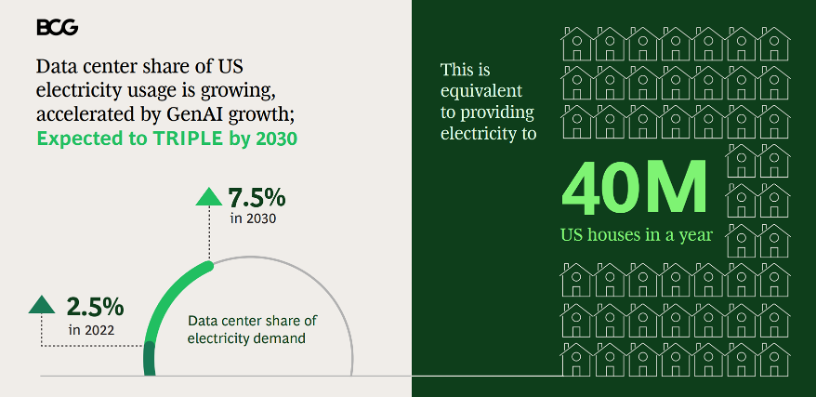

Boston Consulting Group predicts that by 2030, U.S. data center energy consumption will triple, using 7.5% of the country’s electric power (~390 TWh). In 2022, that figure was just 2.5% of the U.S. total (~130 TWh).
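A quick sanity check on those numbers, as a sketch: dividing each TWh figure by its share gives the total demand the projection implies, and the ratio gives an average yearly growth rate. The smooth compound-growth model is my assumption, not something the BCG figures state.

```python
def implied_total_twh(data_center_twh: float, share_of_total: float) -> float:
    """Total electricity demand implied by a data center figure and its share."""
    return data_center_twh / share_of_total


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Average compound growth per year (assumes smooth year-over-year growth)."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"2022 implied total: {implied_total_twh(130, 0.025):,.0f} TWh")
    print(f"2030 implied total: {implied_total_twh(390, 0.075):,.0f} TWh")
    print(f"Growth per year: {annual_growth_rate(130, 390, 2030 - 2022):.1%}")
```

Both years imply a total of about 5,200 TWh, so the quoted shares appear to hold overall demand flat, and tripling over eight years works out to a bit under 15% growth per year.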

The International Energy Agency has looked deeper at cost per function:

A Google search uses 0.3 Wh per request. (That’s the same as turning a 60W light bulb on for 18 seconds.)

A ChatGPT query uses 2.9 Wh per request. That’s nearly 10x.

An “average” conversation with ChatGPT includes between 20-50 prompts.

Each conversation would be using 58 to 145 Wh.

An average US household consumes around 29 kWh per day.

Around 4% of that is on lighting, i.e. around 1200 Wh.

So, around 10 conversations at the longer end of that range equal an entire US household’s lighting for a day.
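If you want to check the math, here is a small sketch that reproduces it from the figures quoted above. The constant and function names are mine, and the only inputs are the numbers already in this post.

```python
GOOGLE_SEARCH_WH = 0.3          # Wh per Google search (quoted above)
CHATGPT_REQUEST_WH = 2.9        # Wh per ChatGPT request (quoted above)
PROMPTS_PER_CONVERSATION = (20, 50)
HOUSEHOLD_KWH_PER_DAY = 29      # average US household
LIGHTING_SHARE = 0.04           # share of household use spent on lighting


def bulb_seconds(energy_wh: float, bulb_watts: float = 60) -> float:
    """Seconds a bulb of bulb_watts can run on energy_wh."""
    return energy_wh * 3600 / bulb_watts


def conversation_wh(prompts: int) -> float:
    """Energy for one conversation with the given number of prompts."""
    return prompts * CHATGPT_REQUEST_WH


def conversations_per_lighting_day(prompts: int) -> float:
    """Conversations that match one household's daily lighting energy."""
    lighting_wh = HOUSEHOLD_KWH_PER_DAY * 1000 * LIGHTING_SHARE
    return lighting_wh / conversation_wh(prompts)


if __name__ == "__main__":
    print(f"One Google search: {bulb_seconds(GOOGLE_SEARCH_WH):.0f} s of a 60W bulb")
    for prompts in PROMPTS_PER_CONVERSATION:
        print(
            f"{prompts} prompts: {conversation_wh(prompts):.0f} Wh, "
            f"{conversations_per_lighting_day(prompts):.0f} conversations per lighting-day"
        )
```

With the unrounded 1,160 Wh lighting figure, it takes about 20 short conversations or about 8 long ones to match a household's daily lighting, so "around 10" sits toward the heavy-use end of the range.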

And guess what?

Each conversation also needs around one 500 mL bottle of fresh water as coolant.

The national average of household water usage in the US is 227 to 264 liters per person per day. And to stay healthy, you need to consume between 2.7 and 3.7 liters per day.

So, about 5 conversations equal healthy hydration for 1 person for a day.
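The same back-of-the-envelope check for water, again as a sketch using only the figures quoted above (the names are mine):

```python
WATER_L_PER_CONVERSATION = 0.5          # one 500 mL bottle per conversation
HYDRATION_L_PER_DAY = (2.7, 3.7)        # healthy daily intake range
HOUSEHOLD_USE_L_PER_PERSON = (227, 264) # US household use per person per day


def conversations_for(liters: float) -> float:
    """Conversations whose cooling water adds up to the given volume."""
    return liters / WATER_L_PER_CONVERSATION


if __name__ == "__main__":
    low, high = HYDRATION_L_PER_DAY
    print(f"Daily hydration: {conversations_for(low):.1f} to {conversations_for(high):.1f} conversations")
    low, high = HOUSEHOLD_USE_L_PER_PERSON
    print(f"Daily household use: {conversations_for(low):.0f} to {conversations_for(high):.0f} conversations")
```

That lands at roughly 5 to 7 conversations per person-day of drinking water, and a few hundred conversations per person-day of total household use.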

(For context, UNESCO reports that 26% of the global population do not have clean drinking water.)

Individually, each conversation might not seem like a lot.

But this is just the beginning.

ChatGPT usage is growing quickly.

Since breaking records to reach 100 million monthly visitors just two months post-launch in 2023, the numbers have only continued to grow. Now, 18 months post-launch, 24% of Americans say they have used the service, and ChatGPT is already fielding as many as 10 million queries globally every day.

If ChatGPT conversations replace Google searches…

And Google searches are on the scale of 99k searches a second…

Imagine 99k conversations a second.

That’s around 13,800 kWh every second – Enough to power around 1,400 Indian households for a whole day (an average Indian household consumes 300 kWh per month).

Every second.

That’s around 50,000 L of water a second – Enough to healthily hydrate 18,000 humans for a whole day.

Every second.
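Here is a sketch of that scale-up, using the per-conversation figures from earlier in this post. The 30-day month and the low-end 2.7 L hydration figure are my assumptions for the conversions, and the names are mine.

```python
CONVERSATIONS_PER_SECOND = 99_000
WH_PER_CONVERSATION_RANGE = (58, 145)      # from the 20-50 prompt range
WATER_L_PER_CONVERSATION = 0.5
INDIAN_HOUSEHOLD_KWH_PER_DAY = 300 / 30    # 300 kWh/month, assuming 30 days
HYDRATION_L_PER_PERSON_PER_DAY = 2.7       # low end of the healthy range


def energy_kwh_per_second(wh_per_conversation: float) -> float:
    """Energy drawn each second if every search were a conversation."""
    return CONVERSATIONS_PER_SECOND * wh_per_conversation / 1000


def households_powered_for_a_day(kwh: float) -> float:
    """Indian households that kwh could power for one day."""
    return kwh / INDIAN_HOUSEHOLD_KWH_PER_DAY


def water_l_per_second() -> float:
    """Cooling water used each second at the same conversation rate."""
    return CONVERSATIONS_PER_SECOND * WATER_L_PER_CONVERSATION


def people_hydrated_for_a_day(liters: float) -> float:
    """People that volume could hydrate for one day."""
    return liters / HYDRATION_L_PER_PERSON_PER_DAY


if __name__ == "__main__":
    for wh in WH_PER_CONVERSATION_RANGE:
        kwh = energy_kwh_per_second(wh)
        print(f"{wh} Wh each: {kwh:,.0f} kWh/s, {households_powered_for_a_day(kwh):,.0f} households")
    liters = water_l_per_second()
    print(f"Water: {liters:,.0f} L/s, {people_hydrated_for_a_day(liters):,.0f} people")
```

The energy range comes out at roughly 5,700 to 14,400 kWh per second, so the 13,800 kWh figure above sits near the long-conversation end. The water figure is about 49,500 L per second, or a little over 18,000 people's daily hydration.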

And it’s not just ChatGPT.

AI adoption is accelerating.

Every startup is an “AI” startup.

Evolving the AI costs energy and water. (For example, training a single AI model like GPT-3 requires as much as 700,000 liters of water.)

Every time we use AI through one of these services, it costs energy and water.

Now another question: What happens to the artificially heated water?

Some can be reused.

Some is lost to water vapor.

But a lot of it just has to be discharged back into the environment.

Likely we discharge it back into the ocean.

The ocean, which covers 71% of the globe, already absorbs the majority of excess heat. And for the last few years, we’ve been in the midst of an oceanic heatwave. Like all heatwaves, the effects can be deadly.

Storms are more violent.

Fish populations migrate or die.

Algae blooms flourish.

And, warm water leads to elevated sea levels; so, we lose land.

The National Environmental Education Foundation has reported that, recently, sea levels have risen roughly an inch per year. For reference, from 1901-1992, sea levels only rose 0.04 to 0.1 inch per year.

For every inch the sea rises, we lose 50-100 inches of “beach.”

Remember, the coastline isn’t all beach.

Cities like San Francisco, Boston, New York and Miami will have to fortify infrastructure and relocate people and homes, or the cities themselves will drown. For example, if Boston Harbor water level rises 3.5 feet (which is a possibility by 2070 according to the Mystic River Watershed Association), entire parts of Chelsea, Everett and Boston would flood twice daily.

Here’s where we get closer to a Mad Max (or Waterworld) future.

Our use of AI accelerates our consumption of energy and exhaustion of natural resources.

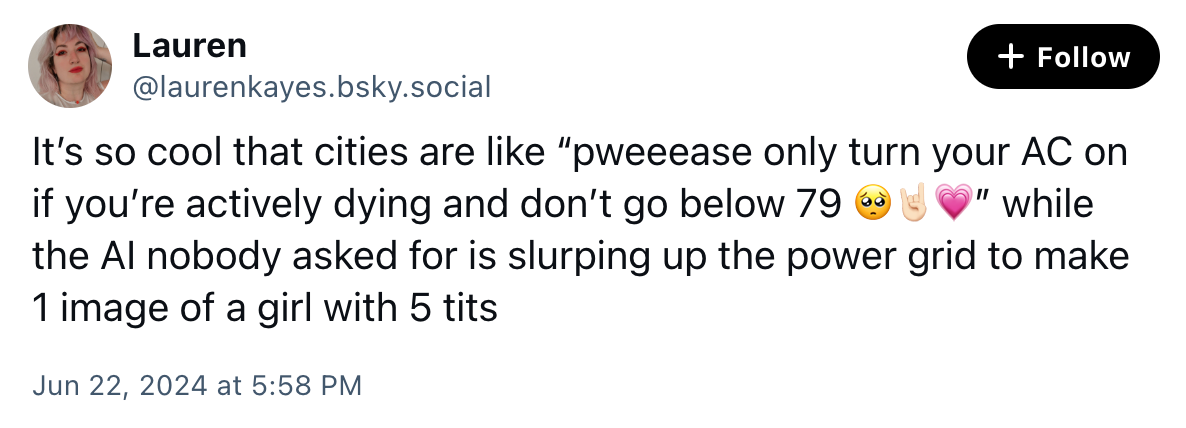

I mean, we’re out here fielding power company warnings amidst the recent heat waves while converting to low-flush toilets… as we ask ChatGPT to write us our bios as rap songs.

Companies like Apple, Google, Meta, Microsoft, and more are gobbling up energy resources to satiate our curiosities and our hunger for more “miracles.” They try to offset this consumption with green energy alternatives, but it’s still not enough. Already, some observers are wondering whether these admirable goals are reachable at all.

So, we’re at a real crossroads: Innovate or fry/drown.

Perhaps before we start worrying about the Terminator, we should worry about how we find new ways to power, to cool, to run “the AI”.

Bill Gates and Microsoft are betting on fusion.

Google is betting on futuristic geothermal solutions.

Sam Altman is investing in small nuclear plants located near the data centers they power.

But what else is possible? What “science fiction” can be made real?

As we hover on the edge of known sciences, investing in accelerating the discovery or the realization of new sciences is increasingly important. We’ve discussed various aspects of this in previous Science Fair issues:

- Scientific brain drain & the innovation cliff

- AI’s potential to catapult invention & innovation

- Our collective preparedness gap as we need to invest in more science-related technologies than ever while much of our VC community lacks the lab and research experience to tell viability from science fiction.

I believe that AI is that hit of NOS to accelerate how we get to these new frontiers much faster. (And this is worth the short-term resource costs.)

And there will be emerging companies who will harness AI to successfully forge the keys that unlock these new frontiers.

They will have an immense impact on our world

… And also just may happen to make a fuckton of $$ along the way.

**ChatGPT was not used in the creation of this post**